The Content Wrangler

When AI Becomes the Search Interface, Structure Becomes the Advantage

Search is no longer about links

Technical writers are watching the same shift everyone else is watching: search engines are turning into AI-driven answer engines. Instead of sending users to web pages or help sites, these systems increasingly synthesize and deliver answers directly inside search results pages and chat interfaces.

For publishers who depend on referral traffic 📈, this raises alarms. For technical writers, it raises a different — and potentially more interesting — question:

If AI systems are now the primary readers of our content, are we writing in a way machines can actually understand?

Uncomfortable Truth: AI Can’t Make Sense of Unstructured Content Blobs

Large language models perform best when they can identify intent, scope, constraints, and relationships. Unstructured prose forces them to guess. That guessing shows up as hallucinations, incomplete answers, or advice taken out of context.

👉🏾 PDFs, long HTML pages, and loosely structured markdown were designed for human reading, not machine reasoning.

They obscure:

What task the user is trying to complete

Which product version applies

What prerequisites or warnings matter

Whether an instruction is conceptual guidance or procedural truth

When AI search engines ingest this kind of content, they flatten it. The answers they return may sound fluent, but they can be dangerously imprecise.

The Real Issue Isn’t AI — It’s Accountability

In AI and Accountability, Sarah O’Keefe argues that organizations are chasing the wrong goal with generative AI in content creation. Many businesses describe success as producing “instant free content,” but that’s a flawed metric. The true organizational goal isn’t volume of output — it’s content that supports business objectives and that users actually use.

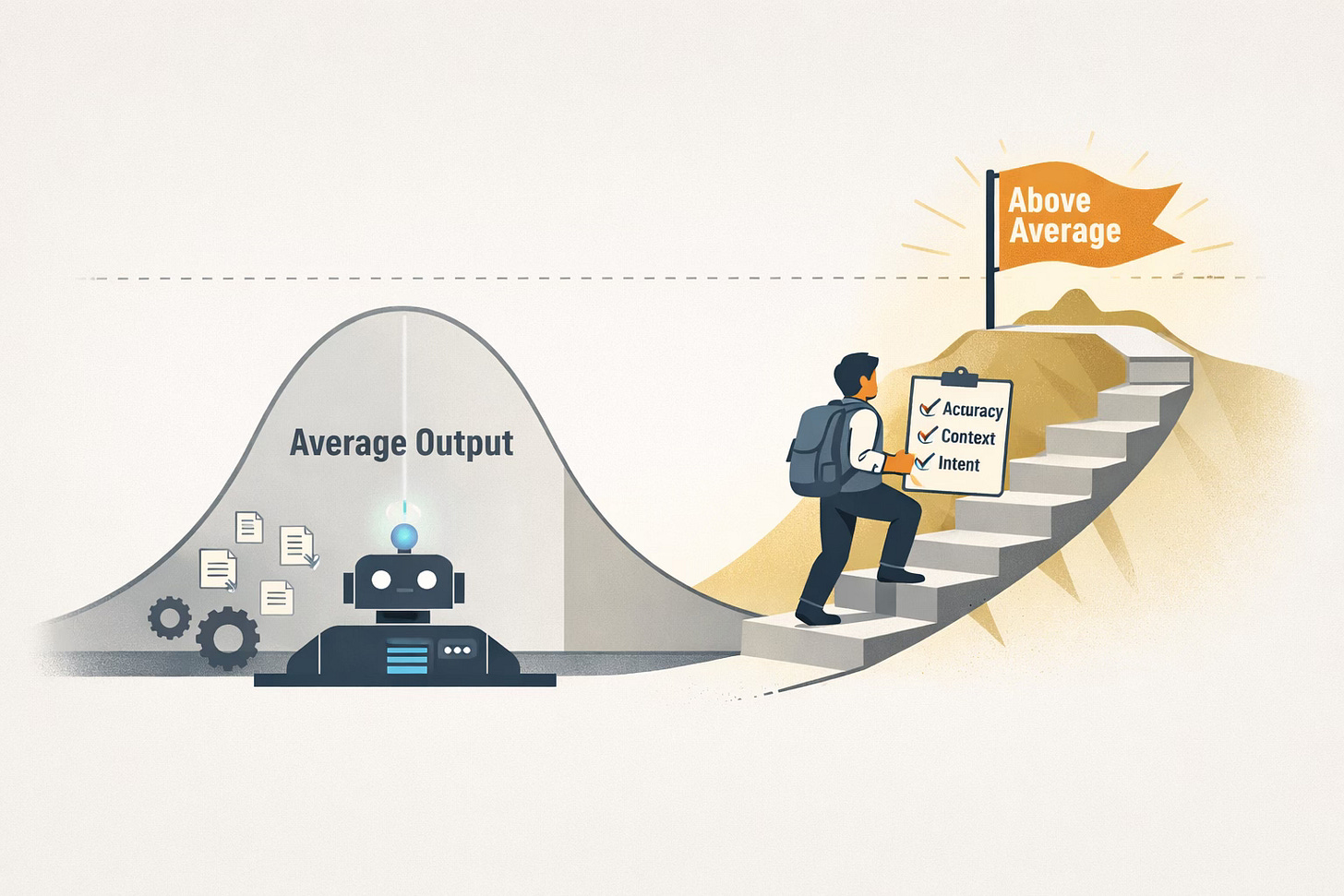

AI Produces Commodity Content

O’Keefe points out that:

Content is already treated as a commodity in many marketing teams

Generative AI excels at producing generic, average content quickly

That’s fine for low-level output, but it doesn’t create high-quality, accurate, domain-specific content on its own

When the goal is high-value content, simply doing “more” with AI fails

O’Keefe’s points mirror broader industry cautions that AI is very good at pattern synthesis but struggles with original, accurate, context-aware creation without structured inputs.

AI Can Raise Average Quality, But It Won’t Rise Above Average

O’Keefe emphasizes that:

If your current content is below average, AI might improve it

If your content needs to be above average, generative AI does not reliably get you there without expert oversight

This performance gap stems from how AI models generate outputs — they produce the statistical average of what they’ve been trained on

This is an important distinction for tech writers whose docs must be precise, technically correct, and trustworthy.

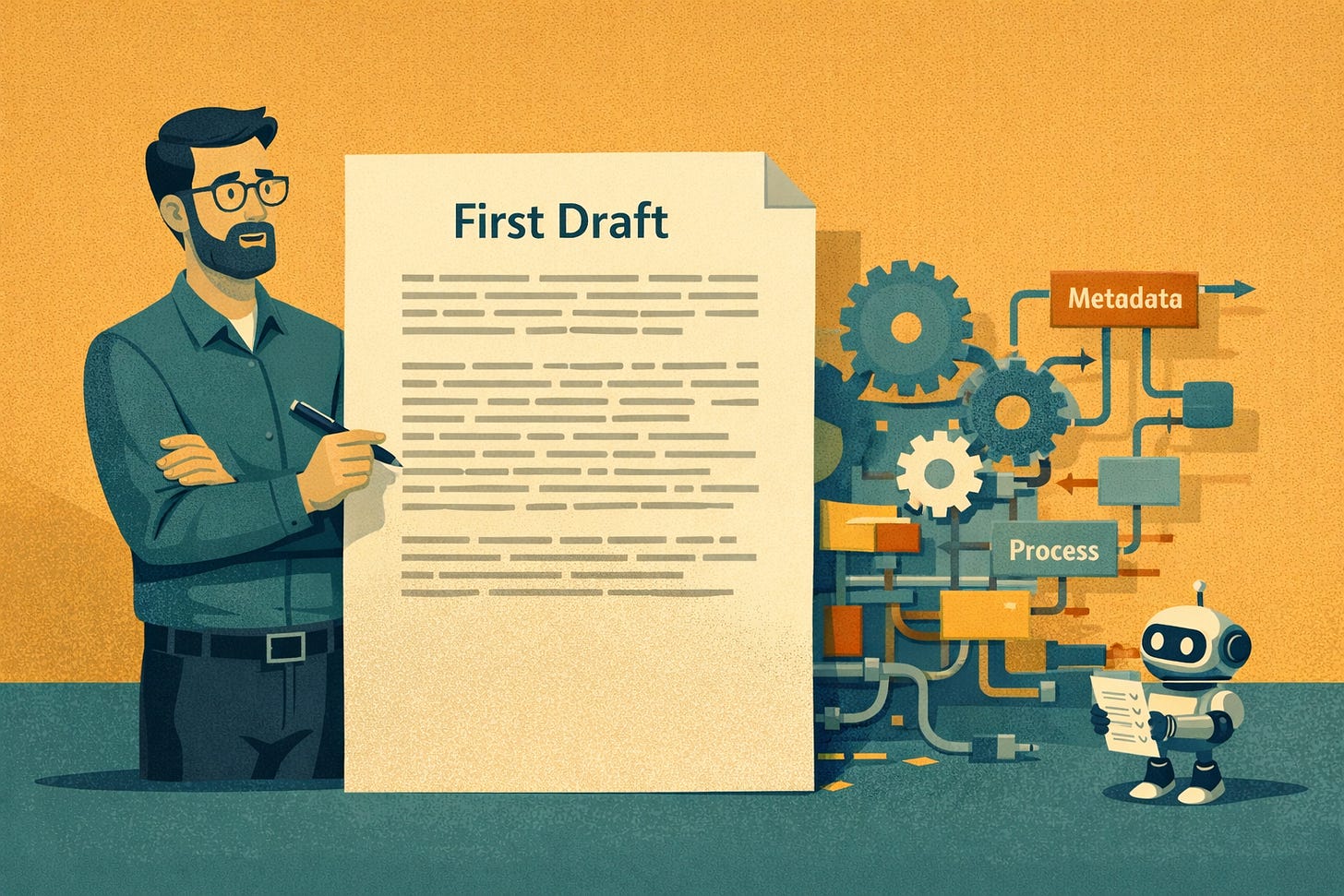

Accountability Doesn’t Go Away With AI

A central theme is: authors remain accountable for the accuracy, completeness, and trustworthiness of content they publish, regardless of whether AI assisted in generating a draft. O’Keefe gives practical examples:

AI can spin articles but still generate bogus references

AI won’t justify legal arguments or identify critical edge cases

Errors from AI are still your responsibility if you publish the content

This has real implications for technical documentation where accuracy and liability matter.

The Hype Cycle Isn’t Strategic

O’Keefe warns that focusing internally on “how heavily we’re using AI” is a hype-driven metric, not a strategic one. Tech writers and content leaders should reframe the conversation:

From “how much AI are we using?”

To “how efficient and reliable is our content production process?”

To “how good is the content we deliver for its intended use?”

This shift helps teams build accountability into their workflows instead of chasing novelty.

What This Means for Tech Writers

For tech writers specifically, here are the distilled takeaways:

AI should be a tool — not the goal: Use AI to handle repetitive, well-defined tasks, while keeping humans responsible for correctness and intent

Focus on quality over quantity: Audiences value clarity and accuracy more than volume

Maintain author accountability: Even when AI suggests or drafts content, the author still owns the final product’s correctness and accuracy, especially for technical and regulated content

Measure impact, not usage: Shift performance evaluation from AI adoption rates to content effectiveness and user outcomes

👉🏾 Read the article. 🤠

2026 State of AI in Technical Documentation Survey

The Content Wrangler and Promptitude invite you to participate in our 2026 State of AI in Technical Documentation Survey, a five-minute multiple-choice survey that aims to collect data on where AI appears in documentation workflows, which tasks it supports, how often it is used, and the challenges that limit broader adoption.

We also seek to understand organizational support, tooling gaps, and where documentation professionals believe AI could deliver the most value in the future.

After the survey closes, we will analyze the results and publish a summary report highlighting the trends, patterns, and shared challenges across the industry. The goal is to provide documentation leaders and practitioners with credible data to guide decisions, advocate for better tools and training, and set realistic expectations for AI’s role in technical documentation.

Complete the survey and provide a valid email address to receive a copy of the final survey results report via email shortly after the survey closes.

The Content Wrangler is a reader-supported publication. To receive new posts and support my work, consider becoming a free or paid subscriber.

Discover How Semantic Structured Content Powers AI Solutions at DITA Europe 2026

It’s not too late! I hope you'll join me in Brussels at Hotel Le Plaza Brussels, February 2-3, 2026, for DITA Europe 2026, the conference for technical writers and information developers looking to build advanced information management and delivery capabilities. If your technical documentation team produces content using the Darwin Information Typing Architecture (DITA), or you’re considering doing so, this is the conference for you.

👉🏼 Grab your tickets now! Save 10% on registration with the discount code TCW at checkout.

I’ll be delivering a talk with Dominik Wever, CEO of Promptitude — Powering AI and Virtual Humans with DITA Content — during which we’ll show how we built an AI-powered bot that delivers trustworthy, contextually accurate answers using technical documentation authored in DITA XML. Then, we’ll take it a step further—layering an interactive video agent (a virtual human) on top of the bot to create an engaging, human-like interface for delivering content.

Attendees will learn why structured content is essential for powering intelligent systems, how to responsibly connect DITA to AI models, and what opportunities virtual humans offer for technical communication. Whether you’re curious about future-ready documentation practices or looking for practical ways to extend the value of your content, this talk will offer both inspiration and concrete lessons.

DITA Europe is brought to you by the Center for Information Development Management (CIDM) — a membership organization that connects content professionals with one another to share insights on trends, best practices, and innovations in the industry.

CIDM offers networking opportunities, hosts conferences, leads roundtable discussions, and publishes newsletters to support continuous learning. CIDM is managed by Comtech Services, a consulting firm specializing in helping organizations design, create, and deliver user-focused information products using modern standards and technologies.

Day One: February 2, 2026

A large portion of Day 1 focuses on how AI actually behaves when it meets real-world documentation systems and legacy content — warts and all.

Key focus includes:

AI benefits and failure modes in real-world use

Aligning AI output with style guides and editorial standards

Enhancing AI results through better context, tools, and editing workflows

Deploying documentation directly to AI systems (not just to humans)

February 2 sessions emphasize that AI quality is constrained by content structure, governance, and determinability:

AI in the Real-World: For Better and Worse — Lief Erickson

Win Your Budget! — Nolwenn Kerzreho

AI + DocOps = SmartDocs — Vaijayanti Nerkar

DITA in Action: Strategies from Real-World Implementations — Maura Moran

Determinability and Probability in the Content Creation Process — Torsten Machert

From Binders to Breakthroughs: Cisco Tech Comms’ DITA Journey — Anton Mezentsev & Manny Set

Enhancing AI Intelligence: Leveraging Tools for Contextual Editing — Radu Coravu

From SMB’s to Enterprises: Real-World Content Strategy Journeys — Pieterjan Vandenweghe and Yves Barbion

Aligning AI Content Generation with Your Styleguide — Alexandru Jitianu

A Transition From One CCMS to Another – Lessons Learned — Eva Nauerth and Peter Shepton

Cutting the Manual Labor: Scaling Localized Software Videos — Wouter Maagdenberg

Revolutionizing Docs: DITA, Automation & AI in Action – Akanksha Gupta

Future of Documentation Portals: From DevPortal to Context Management System — Kristof Van Tomme

How AI is Boosting Established DITA Localization Practices — Dominique Trouche

Trust Over Templates: Skills That Make A Content Transformation Work — Amandine Huchard

Powering AI and Virtual Humans with DITA Content — Scott Abel

DITA 2.0 + Specialization Implementation at ALSTOM — Thomas Roere

Deploying Docs to AI — Patrick Bosek

I’d Like Mayo With That: Our Special Sauce for DTDs — Kris Eberlein

Day Two: February 3, 2026

Transforming Customer Support Through Intelligent Systems — Sharmila Das

DITA and Enterprise AI — Joe Gollner

Agentic AI: How Content Powers Process Automation — Fabrice Lacroix

Why DITA in 2026: The AI Age of Documentation — Deepak Ruhil and Ravi Ramesh

Content Automation 101: What to Automate and What Not To — Dipo Ajose-Coker

Empowering DITA with Standards for AI in Safety-Critical Areas — Martina Schmidt and Harald Stadlbauer

Taxonomy For Good: Revisiting Oz With Taxonomy-Driven Documentation — Eliot Kimber and Scott Hudson

Upgrading the DITA Specification: A Case Study in Adopting 2.0 — Robert Anderson

Hey DITA, Talk To Me! How iiRDS Graphs Infer Answers — Mark Schubert

The Secret Life of Technical Writers: Struggles, Sins, and Strategies — Justyna Hietala

Better Information Retrieval in Bringing DITA, SKOS, iiRDS Together — Harald Stadlbauer, Eliot Kimber, Mark Schubert

If They Don’t Understand, They Can’t Act: Comprehension in DITA — Jonatan Lundin

DITA, iiRDS, and AI Team Up for Smart Content Delivery — Marion Knebel, Gaspard Bébié-Valérian

Pretty As a Screenshot: Agile Docs Powered by QA Tools — Eloise Fromager

Bridging the Gap – Connect DITA, Multimodal Data via iiRDS — Helmut Nagy, Harald Stadlbauer

Guardrails for the Future: AI Governance and Intelligent Information Delivery — Amit Siddhartha

Highly Configurable Content using DITA, Knowledge Graphs and CPQ-Tool — Karsten Schrempp

Building a Content Transmogrifier: Turning Reviews into Something Better — Scott Hudson, Eliot Kimber

👉🏼 Grab your tickets now! Save 10% on registration with the discount code TCW at checkout.


Why AI Search Needs Intent (and Why DITA XML Makes It Possible)

Search used to be about matching keywords. Today, AI-powered search engines promise to “understand what users mean.” In practice, they struggle with something far more basic: intent.

Intent is the goal behind a search query—the job the user is trying to get done at that moment. Are they trying to learn, do, fix, compare, or verify? Intent answers why the question was asked, not just what words were typed.

For technical content, intent matters more than phrasing. A query like “configure OAuth token refresh” can signal at least four different needs:

a conceptual explanation,

a step-by-step task,

a troubleshooting scenario,

or a confirmation that a system even supports the feature.

The words don’t change. The intent does.
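To make the distinction concrete, here is a minimal Python sketch that routes the same query to different DITA topic types once the intent is known. The intent labels, the `INTENT_TO_TOPIC_TYPE` mapping, and `route_query` are hypothetical, and the sketch assumes intent has already been detected; inferring intent from a query is the hard part and is not shown.

```python
# Hypothetical mapping from the user's intent to the DITA topic type
# most likely to satisfy it. The labels are illustrative only.
INTENT_TO_TOPIC_TYPE = {
    "learn": "concept",           # conceptual explanation
    "do": "task",                 # step-by-step procedure
    "fix": "troubleshooting",     # problem, cause, remedy
    "verify": "reference",        # does the system support the feature?
}


def route_query(query: str, intent: str) -> str:
    """Describe which topic type should answer `query` for a given intent.

    The query text never changes; only the intent decides the topic type.
    """
    topic_type = INTENT_TO_TOPIC_TYPE.get(intent)
    if topic_type is None:
        raise ValueError(f"unknown intent: {intent!r}")
    return f"{query!r} -> {topic_type} topic"


if __name__ == "__main__":
    query = "configure OAuth token refresh"
    for intent in ("learn", "do", "fix", "verify"):
        print(route_query(query, intent))
```

Same words, four different destinations: the routing decision depends entirely on information the query text does not carry.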

Why AI Search Engines Struggle With Intent

AI search systems are trained on language patterns, not user goals. That creates several hard problems:

What is the Darwin Information Typing Architecture (DITA)?

The Darwin Information Typing Architecture (DITA) is an XML-based standard for creating, managing, and publishing technical documentation as modular, reusable content. OASIS maintains the DITA standard and provides access to information about its usage.

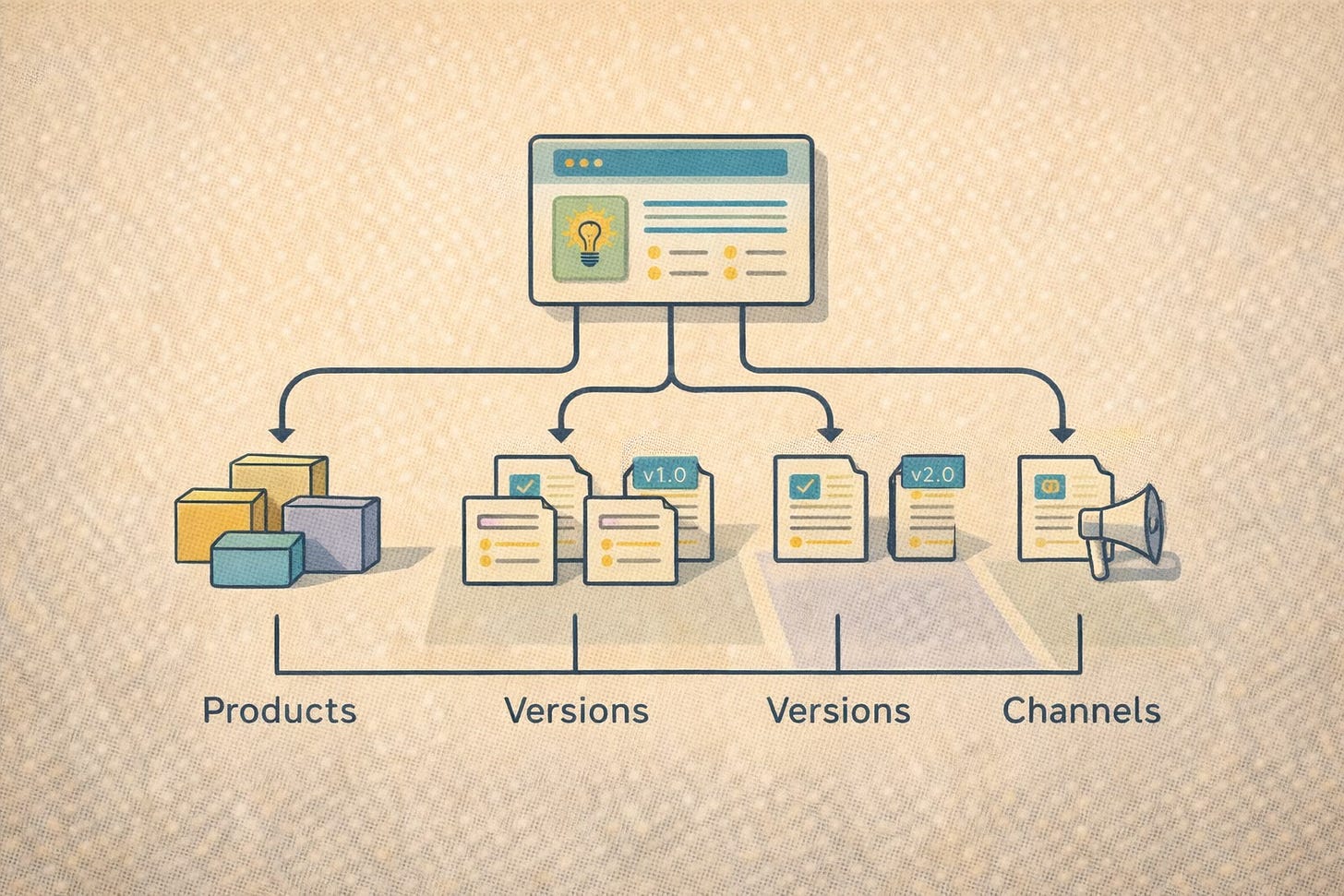

DITA matters because it forces clarity and discipline at the point of creation. Each topic has a single intent — concept, task, or reference — which makes content easier for people to scan and easier for machines to process. That structure enables reliable reuse, reduces duplication, and improves consistency across products, versions, and channels. Writers stop rewriting the same explanation five times and start managing one trusted source of truth.
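Because the information type is declared by a topic's root element, a program can read a topic's intent without interpreting its prose. A minimal sketch using Python's standard `xml.etree.ElementTree`, assuming simplified topics with no DOCTYPE declarations; the file names and content are hypothetical and the markup is not schema-validated.

```python
import xml.etree.ElementTree as ET

# Three simplified, hypothetical DITA topics (no DOCTYPE, not validated).
TOPICS = {
    "oauth-overview.dita": """<concept id="oauth-overview">
  <title>How OAuth token refresh works</title>
  <conbody><p>Refresh tokens let a client obtain new access tokens.</p></conbody>
</concept>""",
    "configure-refresh.dita": """<task id="configure-refresh">
  <title>Configure OAuth token refresh</title>
  <taskbody><steps><step><cmd>Open the settings file.</cmd></step></steps></taskbody>
</task>""",
    "refresh-settings.dita": """<reference id="refresh-settings">
  <title>Token refresh settings</title>
  <refbody><section><p>Default interval: 60 minutes.</p></section></refbody>
</reference>""",
}


def topic_type(xml_text: str) -> str:
    """Return the information type declared by the topic's root element."""
    return ET.fromstring(xml_text).tag


if __name__ == "__main__":
    for name, xml_text in TOPICS.items():
        print(f"{name}: {topic_type(xml_text)}")
```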


DITA is also built for scale and change. Its specialization and inheritance model lets organizations extend the standard without breaking it, while maps assemble topics into many deliverables from the same content set. The result is faster updates, cleaner localization workflows, and publishing pipelines that can target PDFs, HTML, portals, and help systems from the same content base.
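A map is an ordered tree of `topicref` elements that point at topic files, so two maps can assemble different deliverables from one topic set. A minimal sketch, assuming hypothetical file names; it ignores keys, conditional processing, and the other map features a real publishing pipeline would handle.

```python
import xml.etree.ElementTree as ET

# Two hypothetical maps that reuse the same topic files.
GUIDE_MAP = """<map>
  <title>OAuth Administration Guide</title>
  <topicref href="oauth-overview.dita">
    <topicref href="configure-refresh.dita"/>
    <topicref href="refresh-settings.dita"/>
  </topicref>
</map>"""

QUICK_REF_MAP = """<map>
  <title>OAuth Quick Reference</title>
  <topicref href="refresh-settings.dita"/>
</map>"""


def reading_order(map_xml: str) -> list[str]:
    """Return topic hrefs in document order, including nested topicrefs."""
    root = ET.fromstring(map_xml)
    # iter() walks the tree depth-first, which matches reading order.
    return [ref.get("href") for ref in root.iter("topicref")]


if __name__ == "__main__":
    print(reading_order(GUIDE_MAP))
    print(reading_order(QUICK_REF_MAP))
```

Updating `refresh-settings.dita` once changes both deliverables, which is the reuse the paragraph describes.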

Finally, DITA future-proofs documentation for AI-driven delivery. Because topics are semantically typed and structurally consistent, search engines, chat systems, and retrieval-augmented generation pipelines can identify intent and return precise answers instead of dumping entire pages. In an era where documentation feeds intelligent systems, DITA gives technical writers a way to make content both human-readable and machine-ready.
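One way a retrieval pipeline can use typed topics is to filter on type and product version before any ranking or generation happens. This sketch is hypothetical throughout: the `Topic` record, its fields, and the version strings stand in for values a real pipeline would read from each topic's metadata.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Topic:
    """Hypothetical index record for one DITA topic."""
    id: str
    topic_type: str            # "concept", "task", or "reference"
    versions: frozenset[str]   # product versions the topic applies to
    title: str


CORPUS = [
    Topic("configure-refresh-v2", "task", frozenset({"2.0", "2.1"}), "Configure token refresh (2.x)"),
    Topic("configure-refresh-v3", "task", frozenset({"3.0"}), "Configure token refresh"),
    Topic("oauth-overview", "concept", frozenset({"2.0", "2.1", "3.0"}), "How token refresh works"),
]


def candidates(topics: list[Topic], topic_type: str, version: str) -> list[Topic]:
    """Keep only topics of the requested type that apply to the user's version.

    Narrowing by metadata first means later ranking or generation steps see
    purpose-built topics instead of every page that mentions the keywords.
    """
    return [t for t in topics if t.topic_type == topic_type and version in t.versions]


if __name__ == "__main__":
    for topic in candidates(CORPUS, "task", "3.0"):
        print(topic.id, "-", topic.title)
```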

DITA content is best managed in a component content management system designed specifically to help technical writers create, manage, and deliver documentation to the humans and machines that require it. 🤠

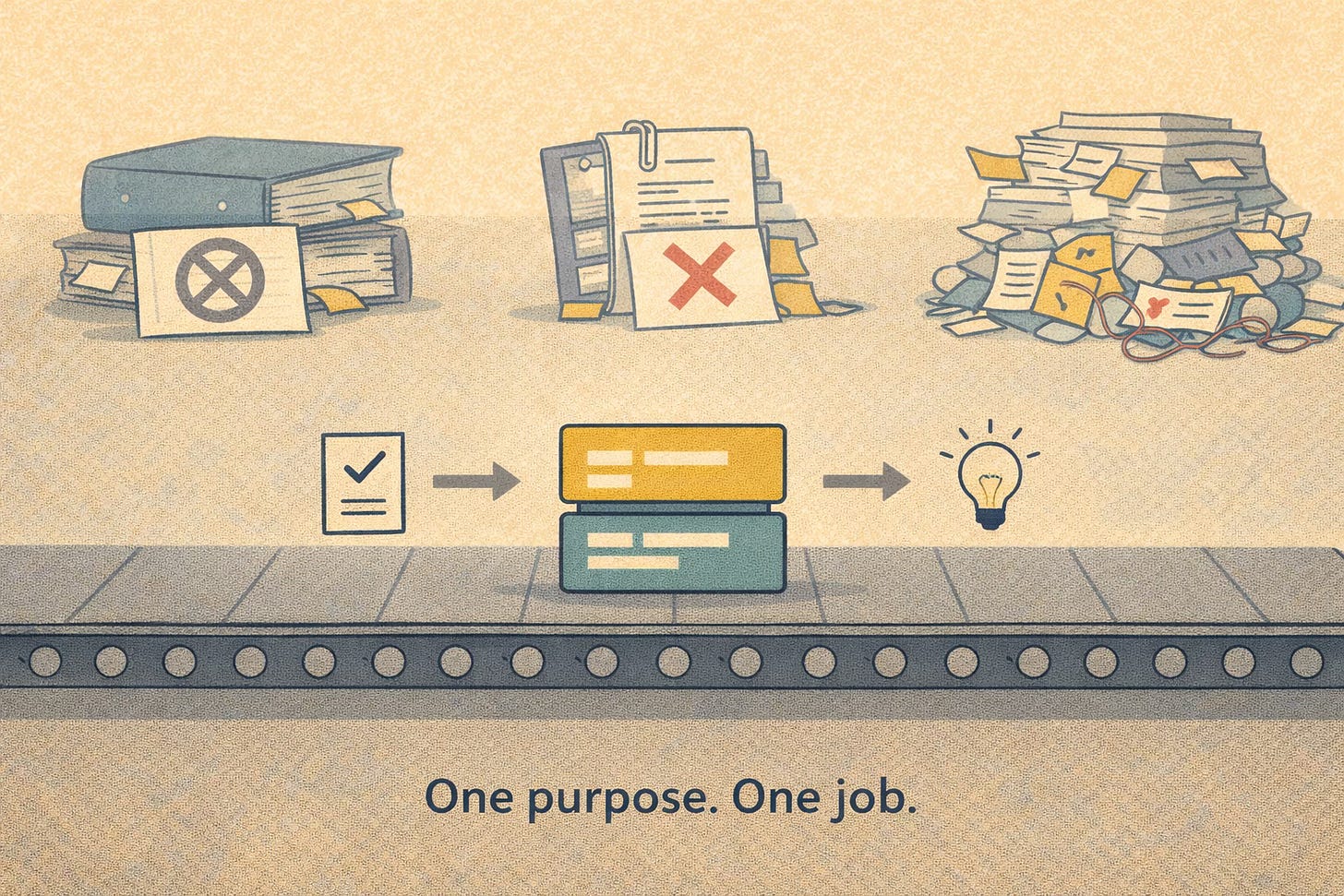

What a DITA Topic Is (and Why It Matters)

If you work with the Darwin Information Typing Architecture (DITA), or plan to in the future, you are likely to hear the word topic constantly. It sounds obvious, until you try to explain it to someone outside the DITA world or, worse, to someone who thinks a topic is just “a page” or “a section.” It isn’t.

A DITA topic is a small, self-contained unit of information designed to answer one specific user need. Not a chapter. Not a document. Not a dumping ground for everything related to a feature. One purpose. One job.


A DITA topic has three defining characteristics

1. It Has Clear Intent

Every DITA topic exists to do exactly one thing: explain a concept, describe a task, or provide reference information. This is not an accident. DITA enforces intent through information typing—most commonly concept, task, and reference.


That constraint forces writers to decide why the content exists before writing it. Is the user trying to understand something? Do something? Look something up?

📌 If you cannot answer that question, you do not yet have a topic.

This is one of the reasons DITA content works so well with search, delivery platforms, and AI systems. Intent is explicit, not implied.

2. It Stands on Its Own

A DITA topic is designed to be meaningful outside the context of a book or manual. Someone might encounter it through search, a chatbot, an embedded help panel, or an AI answer engine. Regardless of how they encounter it, the topic must still make sense.

That means no “as described above,” no unexplained acronyms, and no reliance on surrounding pages or section headers to fill in critical gaps. Context travels with the topic through metadata, structure, and reuse — not through proximity.

This independence is what enables reuse at scale without copy-and-paste chaos.
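Some of these rules can be checked mechanically. The sketch below is a hypothetical heuristic linter: the phrase list, the acronym pattern, and the approved-glossary idea are assumptions for illustration, not a DITA feature, and a real check would need a much richer rule set.

```python
import re

# Illustrative phrases that signal dependence on surrounding pages.
POSITIONAL_PHRASES = ("as described above", "as mentioned earlier", "see below", "previous section")

# Two or more capital letters standing alone as a word, e.g. "TTL".
ACRONYM = re.compile(r"\b[A-Z]{2,}\b")


def standalone_issues(text: str, glossary: set[str]) -> list[str]:
    """List reasons a topic might not make sense when read on its own."""
    issues = []
    lowered = text.lower()
    for phrase in POSITIONAL_PHRASES:
        if phrase in lowered:
            issues.append(f"positional reference: {phrase!r}")
    # Acronyms not in the approved glossary are flagged as unexplained.
    for acronym in sorted(set(ACRONYM.findall(text))):
        if acronym not in glossary:
            issues.append(f"undefined acronym: {acronym}")
    return issues


if __name__ == "__main__":
    sample = "As described above, set the TTL before enabling OAuth."
    for issue in standalone_issues(sample, glossary=set()):
        print(issue)
```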

3. It’s Structurally Predictable

A DITA topic follows a consistent structural pattern: a title, a body, and clearly defined elements inside. Tasks have steps. References have tables and properties. Concepts explain ideas, not procedures.

That predictability is not just for writers; it is also for machines. Structured topics allow systems to classify, filter, assemble, personalize, and deliver content dynamically. This is why DITA topics are increasingly valuable in AI-driven environments.

Large language models do not “understand” documents. They perform far better with small, well-typed, semantically clear units of information.
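Because every task topic puts its prerequisites and steps in the same place, one query works across an entire task library. A minimal sketch with Python's `xml.etree.ElementTree`, assuming a simplified, hypothetical task topic without a DOCTYPE declaration.

```python
import xml.etree.ElementTree as ET

# A simplified, hypothetical DITA task topic.
TASK_XML = """<task id="configure-refresh">
  <title>Configure OAuth token refresh</title>
  <taskbody>
    <prereq><p>You need administrator access.</p></prereq>
    <steps>
      <step><cmd>Open the authentication settings.</cmd></step>
      <step><cmd>Set the refresh interval.</cmd></step>
      <step><cmd>Save your changes.</cmd></step>
    </steps>
  </taskbody>
</task>"""


def extract_steps(task_xml: str) -> list[str]:
    """Return the command text of each step, in order."""
    root = ET.fromstring(task_xml)
    # itertext() keeps text from any inline markup inside <cmd>.
    return ["".join(cmd.itertext()).strip() for cmd in root.findall("./taskbody/steps/step/cmd")]


def extract_prereq(task_xml: str) -> str:
    """Return the first prerequisite paragraph, or an empty string."""
    root = ET.fromstring(task_xml)
    return (root.findtext("./taskbody/prereq/p") or "").strip()


if __name__ == "__main__":
    print("Before you begin:", extract_prereq(TASK_XML))
    for number, step in enumerate(extract_steps(TASK_XML), start=1):
        print(f"{number}. {step}")
```

A system built this way never has to guess which sentences are instructions; the markup says so.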

Why This Matters Now

As search engines turn into answer engines and documentation gets consumed in fragments, the DITA topic becomes the atomic unit of knowledge delivery. Writers who understand how to design strong topics are not just explaining products; they are engineering intent-aware, machine-processable content that feeds knowledge systems.

If you want AI solutions to give users the right answer instead of a plausible one, start with better topics.

If your content:

clearly states its purpose,

uses consistent information types,

exposes relationships through structure and metadata,

AI can reason over it. If it doesn’t, AI guesses.

Structured, semantically augmented DITA content allows AI systems to:

distinguish learning intent from execution intent,

select the right topic type for the moment,

avoid blending procedural steps into conceptual explanations,

reduce hallucination caused by context collapse.

In short, it helps AI choose instead of invent.

What This Means For Tech Writing

Technical writers are no longer just explaining products. They are designing intent-aware knowledge systems.

Every time you:

choose a topic type,

enforce information typing discipline,

add meaningful metadata,

break monolithic docs into purpose-driven components,

you make it easier for AI search engines to deliver the right answer, not just an answer.

In an AI search world, visibility depends less on keywords and more on whether machines can understand what your content is for.

DITA doesn’t just support that future. It anticipates it. 🤠


From Data to Understanding: The Work Machines Can’t Do Without Tech Writers

Technical writers live in a strange middle ground. We translate what systems produce into something people can understand and use. Lately, that middle ground has gotten more crowded. Data teams, AI teams, and platform teams increasingly want documentation to behave like data—structured, atomized, and machine-friendly.

The assumption is that machines thrive on raw inputs and that humans can be served later by whatever the system generates.

That assumption is wrong!

The uncomfortable truth is this: data without context doesn’t just fail people—it fails machines too. The failure simply shows up later, downstream, disguised as “AI output.”

Most documentation problems trace back to a quiet but persistent confusion between four related — but very different — concepts: data, content, information, and knowledge.

They are often used interchangeably in meetings and strategy decks, as if they were synonyms. They are not.

Each represents a different stage in the creation, interpretation, and application of meaning. When those distinctions blur, teams ship docs that look complete but fail in practice (leaving both users and AI systems to guess at what was never clearly expressed).

A simple example, repurposed from Rahel Anne Bailie’s self-paced workshop for the Conversational Design Institute, Mastering Content Structure for LLMs, makes this painfully clear.

A Data Example

Imagine encountering the number 242.

On its own, it is nothing more than a value. Humans can’t reliably interpret it, and neither can an AI system. It could be a temperature, an identifier, a page number, or something else entirely. There is no intent encoded in it. No audience implied. No action suggested. It is easy to store and transmit, but useless for understanding.
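The difference between a bare value and an interpretable one fits in a few lines of code. This hypothetical `Datum` type attaches only a meaning and a unit, which covers just the first step from data toward information; it is an illustration, not a model of the full distinction between data, content, information, and knowledge.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Datum:
    """A raw value plus the minimum context needed to interpret it."""
    value: int
    meaning: str      # what the value represents
    unit: str = ""    # unit of measure, if any

    def describe(self) -> str:
        unit = f" {self.unit}" if self.unit else ""
        return f"{self.meaning}: {self.value}{unit}"


raw = 242  # data alone: nothing says what this number is

# The same stored value under three hypothetical contexts.
READINGS = [
    Datum(242, "Temperature", "°C"),
    Datum(242, "Customer identifier"),
    Datum(242, "Page number"),
]

if __name__ == "__main__":
    for reading in READINGS:
        print(reading.describe())
```

The stored number never changes; only the attached context makes it usable by a person or a machine.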

Shifting Roles: From Content Professionals to Ontologists

Content pros are encountering new challenges in the Large Language Model (LLM) and agent era, highlighting the need for a different approach to authoring tools. Trust in LLM-generated content is the next hurdle, and transparency about the LLM’s information sources is needed.

This matters because an LLM’s answers are only as good as its source content. In most cases, that content is proprietary, so it is not part of the general knowledge an LLM picks up from public sources such as blog posts. For an LLM to act as an expert on your product, someone needs to author that content, with or without AI assistance.

The Current Paradigm

Right now, most authoring is aimed at publishing content for people. Chatbots can use this information to answer questions, but the content isn’t designed with agents in mind. Humans remain an important audience, but we also need source content that works well for both LLMs and people. Just as web design shifted to a “mobile-first” approach, documentation could move to an “agent-first” design. This doesn’t mean humans matter less; it means designing for agents is the harder constraint, and content that satisfies it will still meet human needs.


The Shifting Role of Authors

Authors should always retain the freedom to “just type something” in an editor, but moving forward, a bit more discipline will be required. As LLMs and agents become more adept at generating content (especially when supplied with verifiable facts), the role of the human author is evolving. Their primary responsibility will shift toward establishing a foundation for content verification. This includes building taxonomies, which might be generated automatically from source material and then refined by the author, or created manually by a discerning analyst for maximum control. Authors will also help map product concepts to ontologies to enable deeper and more meaningful interconnections.
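An ontology goes beyond hierarchy by naming how concepts relate to one another. A minimal sketch using plain (subject, predicate, object) tuples; the concepts and relationship names are hypothetical, and production ontologies are typically expressed in W3C standards such as RDF and OWL rather than Python lists.

```python
from collections import deque

# Hypothetical product concepts linked by named relationships,
# stored as (subject, predicate, object) triples.
TRIPLES = [
    ("refresh token", "is exchanged for", "access token"),
    ("access token", "is issued by", "authorization server"),
    ("access token", "authorizes calls to", "REST API"),
    ("authorization server", "is configured in", "Admin Console"),
]


def related(concept: str) -> list[tuple[str, str]]:
    """Return (relationship, concept) pairs for links leaving `concept`."""
    return [(pred, obj) for subj, pred, obj in TRIPLES if subj == concept]


def reachable(start: str) -> set[str]:
    """Return every concept reachable from `start` by following links (breadth-first)."""
    seen, queue = set(), deque([start])
    while queue:
        current = queue.popleft()
        for _, obj in related(current):
            if obj not in seen:
                seen.add(obj)
                queue.append(obj)
    return seen


if __name__ == "__main__":
    print(related("access token"))
    print(sorted(reachable("refresh token")))
```

Following named links is what lets a system connect a question about refresh tokens to the console where the authorization server is configured.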

Taxonomies: How to Find Things

A taxonomy organizes items into categories within a clear hierarchical structure, much like a tree with branches and sub-branches. Each item is assigned to a category, making it easier to find by moving from broad groupings to more specific ones. These relationships typically take the form of parent-child or sibling connections.

We see taxonomies every day—for example, website navigation menus that let us drill down from broad categories to more specific items.
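The drill-down behavior described above can be sketched as a nested dictionary, with one lookup that returns the broad-to-specific path to a term and another that finds its siblings. The category names are hypothetical.

```python
from typing import Optional

# A small hypothetical taxonomy: each key is a category and each value
# holds its children. An empty dict marks a leaf.
TAXONOMY = {
    "Products": {
        "Authentication": {"OAuth": {}, "SAML": {}},
        "Billing": {"Invoices": {}},
    },
}


def path_to(term: str, tree: dict, trail: tuple = ()) -> Optional[list]:
    """Return the parent-to-child path from the root to `term`, or None."""
    for name, children in tree.items():
        here = trail + (name,)
        if name == term:
            return list(here)
        found = path_to(term, children, here)
        if found:
            return found
    return None


def siblings(term: str, tree: dict) -> Optional[list]:
    """Return the other children of `term`'s parent, or None if it has no parent."""
    for children in tree.values():
        if term in children:
            return [child for child in children if child != term]
        result = siblings(term, children)
        if result is not None:
            return result
    return None


if __name__ == "__main__":
    print(path_to("SAML", TAXONOMY))
    print(siblings("SAML", TAXONOMY))
```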

Why do taxonomies matter?

According to Earley Information Science:


Why Tech Writers Don’t “Have Time for AI” — and Why That’s the Whole Problem

If you’re a technical writer who has been told to “start using AI,” there’s a good chance this instruction arrived the same way most bad ideas do: casually, optimistically, and with absolutely no adjustment to your workload.

You were already juggling three releases, a backlog of outdated content, an SME who responds exclusively in emojis, and a publishing system held together by hope and duct tape. Now you’re also supposed to “use AI” — presumably in the five spare minutes between standup and your next fire drill.

When AI fails to deliver miracles under these conditions, leadership often concludes that AI is overrated. This is unfair to you, and frankly, unfair to the robot.

AI Isn’t a Magic Button — It’s a Process Multiplier

AI does not save time by default. It reallocates time.

To use AI responsibly in technical documentation work, you need time to:

Experiment with real content, not toy examples

Learn which tasks benefit from AI and which ones absolutely do not

Validate output, because “confidently wrong” is still wrong

Adjust workflows, prompts, review steps, and quality checks

If none of that time exists, AI use collapses into exactly one activity: drafting text faster.

Drafting is fine. Drafting is useful. Drafting alone is not transformation.

What Actually Happens Under Time Pressure

When documentation teams operate under constant time pressure, AI adoption tends to play out in a very predictable way. Writers reach for AI to generate first drafts because it offers the quickest visible win and creates the feeling of progress. The problem is that speed at the front of the process often pushes effort to the back.

Review cycles grow longer as someone has to verify facts, check tone, and untangle confident-sounding mistakes. Quality concerns begin to surface, not because AI is inherently flawed, but because it is being asked to compensate for a lack of time, structure, and planning.

As these issues accumulate, trust in the tool starts to erode. Conversations quietly shift from “How can we use this better?” to “This is risky,” and then to “Let’s limit where this can be used.”

Almost no one pauses to ask the more uncomfortable question: whether the real problem is that writers were expected to redesign workflows, adopt new practices, and safeguard quality without being given the time to do any of that work properly.

Instead, AI becomes the convenient scapegoat. Workloads stay the same, pressure remains high, and the system continues exactly as it did before—just with one more abandoned initiative added to the pile.

Why This Isn’t a Personal Failure

If you feel like you “don’t have time for AI,” that does not mean you are behind, resistant, or doing it wrong. It means your organization has not yet made room for change.

AI adoption requires slack in the system. Not endless slack. Just enough breathing room to think, test, and improve without breaking production.

Without that space, AI becomes another demand layered onto an already impossible job. And no technology (no matter how hyped) can fix that.

The Real Conversation We Need to Be Having

The question is not, “Why aren’t writers using AI more?”

The question is, “What work could we stop doing, simplify, or delay so writers can safely integrate AI into how documentation actually gets produced?”

Until that question is answered, AI will remain a side experiment — interesting, occasionally helpful, and permanently underpowered.

And the robot will continue to be blamed for a problem it did not create.

2025 Structured Content Survey

During the 2025 LavaCon Content Strategy Conference, the folks at Heretto gave away some cool merch (hats, t-shirts, and sweatshirts). I’m pictured here with the “content” t-shirt 👕, but in retrospect, I should have grabbed a sweatshirt, because ❄️winter🥶 is here!

Now it’s your chance to score a “content” sweatshirt

Here’s how: Complete the Heretto Structured Content Survey (2 minutes max) and you’ll qualify to be entered into the drawing.

Complete the survey before December 31, 2025, to qualify. Winners will be contacted in January 2026.


The 2025 State of Customer Self-Service Report Is Out — See How Your Content Stacks Up

Organizations everywhere are rethinking how they deliver customer support, and the smartest teams are turning to AI, structured content, and stronger cross-functional collaboration to do it. Earlier this year, Heretto released the State of Customer Self-Service Report, a data-rich look at how leading companies are transforming self-service into a strategic advantage. If you haven’t downloaded it yet, now is the time.

This report doesn’t just highlight trends. It reveals what top performers actually do to create self-service experiences that reduce effort, deflect tickets, and give customers the answers they need the moment they need them.

Download the State of Customer Self-Service Report now and see exactly where your organization excels, where your gaps are, and what steps you can take to improve.

Inside The State of Customer Self-Service Report

You’ll learn how organizations are using:

AI to power smarter, more personalized support. The report explores how teams are adopting AI responsibly, pairing machine intelligence with strong content foundations to improve relevance and accuracy across the customer journey.

Structured content to move beyond scattered, inconsistent knowledge bases. High performers are investing in reusable, well-governed content that scales across channels and markets — and makes AI far more reliable.

Cross-functional collaboration to break down silos between product, support, documentation, and marketing. Teams that treat content as shared infrastructure, rather than as a departmental afterthought, deliver better self-service outcomes and faster ROI.

Why This Matters

Self-service is now the first stop for a growing majority of customers. That shift raises expectations for clarity, findability, consistency, and personalization. What you’ll see in the report is that organizations that meet those expectations aren’t relying on guesswork; they’re aligning strategy, structure, and technology to deliver high-quality content at scale.

The report also explores the barriers teams run into: limited staffing, siloed tooling, unclear ownership, and inconsistent processes. More importantly, it outlines how leaders overcome those challenges and build momentum.

Next Step: Benchmark Your Content Against Industry Realities

If your organization delivers customer-facing content (technical docs, support articles, onboarding flows, or product guidance), you should download this report and see how your strategy compares to the findings.

Use it as a benchmark. Use it as a wake-up call. Use it as the evidence you need to advocate for smarter, more scalable content operations.

The insights are available now. Your competitors may already be acting on them. Don’t wait!

Download the State of Customer Self-Service Report now and see exactly where your organization excels, where your gaps are, and what steps you can take to improve. 🤠


How To Advocate For The Structural Investments Your AI Initiatives Actually Require

Everyone wants AI. Few leaders want to think about how it actually works. If you’ve been told to “use AI” without being given the time, structure, or systems needed to make that request anything other than comedy, you already understand the tension behind this whole conversation.

Technical writers are being asked to conjure miracles from the literary equivalent of a junk drawer. Leadership wants smarter chatbots. They do not want to hear that the chatbot cannot perform surgery on a heap of inconsistent topics last updated during the dot-com bubble.

So here we are: expected to make AI sing while working with content that can barely hum.

Why “Unsexy” Structural Work Determines AI Success

This is why structural investments matter. Not “nice-to-have someday” investments — the foundational work that determines whether any AI initiative lives, dies, or produces the kind of answers that make support teams question their career choices. When you bring this up, you may be met with the blank stare reserved for people who talk about metadata in public. That’s fine. Your job isn’t to dazzle them. Your job is to translate structural needs into something leaders recognize as business value, not writerly fussiness.

Start with a shared definition. Structural investment isn’t mysterious. It’s the unglamorous backbone of any AI program: structured content, consistent terminology, clean versioning, healthy repositories, sensible taxonomies, and governance that doesn’t rely on six people remembering to “just ping each other.”

These aren’t luxuries. They’re the conditions that determine whether AI can find what it needs, recognize what it sees, and deliver answers that don’t read like refrigerator poetry.

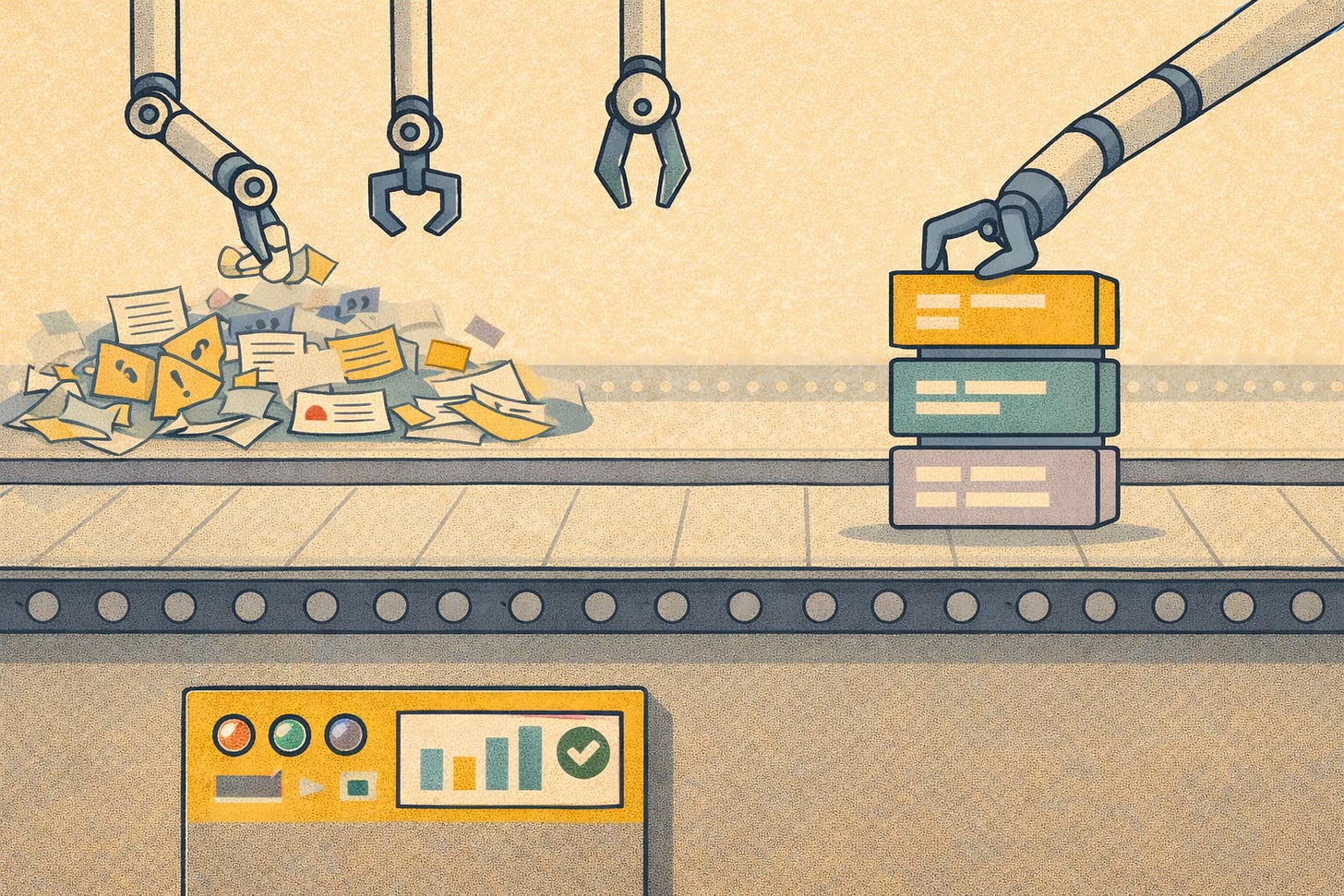

Why AI Falls Apart Without Structure

You already know why these foundations matter. AI needs patterns. It needs clarity. It needs content that behaves predictably. Hand an AI a pile of duplicative, contradictory, unlabeled topics and it will react like any reasonable being: it will guess. And it will guess poorly. When terminology is inconsistent, retrieval collapses. When topics are half-updated, summarization breaks. When metadata is missing, routing becomes improvisational theater. None of this is AI’s fault. The systems simply can’t work when the content isn’t prepared to support them.

But here’s the real trick: when you advocate for these fixes, don’t talk to leadership in the language of writers. They don’t wake up in the morning worrying about chunking strategies or rogue taxonomies. They think in terms of efficiency, risk, and repeatability. They care about faster onboarding. Lower support costs. Reduced rework. Improved customer experience. These are all things structural improvements deliver — they just don’t sound like they’re about topic types.

So translate the need. “We need metadata” becomes “AI tools cost more to maintain and produce less reliable output when content isn’t findable.” “We need better structure” becomes “Support tickets rise because AI can’t distinguish which answer is current.”

Shift the conversation away from what you want and toward what the business needs — because they’re the same thing, even if the vocabulary differs.

Start Small, Show The Pain, And Make The Case

Advocacy works best when you stop trying to fix everything at once. Start with a small audit of the pain everyone already knows about: duplicated content, outdated topics, inconsistent terminology, missing metadata, chaotic versioning. These problems are not secrets; most organizations simply lack the language to connect them to AI performance. You can make the connection clear. Collect examples of AI outputs that went sideways because of structural issues. Show the time your team loses rewriting machine-generated answers that pulled from outdated content. Nothing builds support faster than seeing the cost of inaction.
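If your content lives in files that carry even a little metadata, a first pass at that audit doesn't need special tooling. The Python sketch below shows one way to do it. It rests on stated assumptions: the `Topic` fields, the required metadata keys, and the one-year staleness threshold are all hypothetical placeholders. The duplicate check also only catches copies that differ in case or whitespace, not paraphrases.

```python
import hashlib
import re
from dataclasses import dataclass, field
from datetime import date

# Hypothetical metadata keys every topic should carry; substitute your own model.
REQUIRED_METADATA = ("product", "version", "audience")

@dataclass
class Topic:
    topic_id: str
    body: str
    last_updated: date
    metadata: dict = field(default_factory=dict)

def fingerprint(text: str) -> str:
    """Hash the text after collapsing whitespace and case, so copies that
    differ only in formatting produce the same fingerprint."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

def audit(topics, today: date, max_age_days: int = 365) -> dict:
    """Report duplicate groups, stale topics, and missing metadata keys."""
    by_hash = {}
    for t in topics:
        by_hash.setdefault(fingerprint(t.body), []).append(t.topic_id)
    duplicates = [ids for ids in by_hash.values() if len(ids) > 1]
    stale = [t.topic_id for t in topics
             if (today - t.last_updated).days > max_age_days]
    missing = {}
    for t in topics:
        absent = [key for key in REQUIRED_METADATA if not t.metadata.get(key)]
        if absent:
            missing[t.topic_id] = absent
    return {"duplicates": duplicates, "stale": stale, "missing_metadata": missing}

# Invented sample topics for illustration.
topics = [
    Topic("install-a", "Install the agent.\n Run setup.", date(2025, 6, 1),
          {"product": "Agent", "version": "3.2", "audience": "admin"}),
    Topic("install-b", "install the agent. run setup.", date(2021, 1, 15),
          {"product": "Agent"}),
]
report = audit(topics, today=date(2025, 12, 1))
print(report)
```

Counts from a report like this give you concrete numbers to put in front of leadership, instead of a general complaint that “the content is messy.”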

Next, outline the smallest possible set of structural improvements that would produce measurable gains. You don’t need a five-year transformation plan. You need a believable starting point.

Propose a contained, achievable fix — a pilot for cleaning key topics, a simple metadata model, or a tidy workflow that reduces version confusion. Package the request in a one-page summary that explains the problem, the underlying cause, the small investment required, and the business outcome. Keep it concise enough that someone could read it between meetings, but clear enough to make the value unmistakable.

Find Allies Who Need This As Much As You Do

You’ll also want allies. Not the kind who nod politely, but the ones who actually suffer alongside you: support leads who hate inconsistent answers, engineers who want fewer interruptions, localization teams who want stable content, product managers who want AI-ready documentation without having to understand how it works. When they see how structure helps them, they will become your best advocates.

When the time comes to demonstrate return on investment (ROI), stay grounded in metrics that matter. Show how reuse improves. How duplication drops. How AI outputs require fewer edits. How search becomes more accurate. How support volume shifts when answers get consistent. You’re not just proving that structure works — you’re showing how it delivers the outcomes AI promised but couldn’t reach alone.
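One way to make “AI outputs require fewer edits” measurable is to compare each AI draft with the version that was actually published. The sketch below uses Python's standard `difflib.SequenceMatcher` as a rough, character-level proxy for edit effort. The sample strings are invented, and a real program would pull draft and published pairs from your own review workflow.

```python
from difflib import SequenceMatcher

def edit_effort(ai_draft: str, published: str) -> float:
    """Rough share of the text that changed between the AI draft and the
    published answer: 0.0 means accepted as-is, values near 1.0 mean rewritten."""
    return 1.0 - SequenceMatcher(None, ai_draft, published).ratio()

def average_edit_effort(pairs) -> float:
    """Average edit effort over (ai_draft, published) pairs; 0.0 if there are none."""
    if not pairs:
        return 0.0
    return sum(edit_effort(draft, final) for draft, final in pairs) / len(pairs)

# Invented examples: drafts before and after a structural cleanup.
before = [("Click Save to exit.", "Select Save, then close the dialog.")]
after = [("Select Save, then close the dialog.", "Select Save, then close the dialog.")]
print(f"before: {average_edit_effort(before):.2f}, after: {average_edit_effort(after):.2f}")
```

Tracking that average before and after a structural fix gives leadership a trend line rather than an anecdote.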

Lead The Charge: AI Works When Writers Demand What It Needs

AI cannot function without the foundations writers have championed for years. And writers cannot keep pretending that “working harder” is a substitute for the structural investment AI requires. If your organization wants AI that performs with reliability, explainability, and consistency, you are uniquely positioned to guide them there.

Leading the charge for these investments isn’t self-serving. It’s responsible stewardship of the content the entire business depends on. The message you want to leave with leadership — and with yourself — is straightforward: AI succeeds when structure succeeds. And structure succeeds when technical writers stop quietly compensating for the gaps and start leading the conversation about what good actually requires. 🤠

Results From The Technical Documentation Staffing Survey

Join us for Coffee and Content with Jack Molisani, founder of ProSpring Technical Staffing and the organizer of the LavaCon Conference on Content Strategy and Technical Documentation Management. On this episode we’ll unpack the findings of the 2025 Technical Documentation Staffing Survey from The Content Wrangler and ProSpring — the most comprehensive look yet at how content teams are hiring, where they’re struggling, and what skills are most in demand.

Survey data show that while over 60% of managers face headcount authorization hurdles, many are also frustrated by a lack of candidates with structured authoring, API documentation, and AI/prompt engineering experience.

See: Call for presenters —> LavaCon 2026 — Charlotte, North Carolina

We’ll explore how these realities are reshaping hiring priorities — and what it means for writers, recruiters, and managers navigating today’s competitive job market.

Expect an honest discussion about:

Which roles are most in demand (and which are fading)

Why compensation and remote work remain hot-button issues

How AI and automation are changing the skills employers value

What organizations can do to attract and retain top technical talent

Whether you’re hiring, job hunting, or simply curious where the profession is headed, this conversation offers a rare data-driven snapshot of the industry — straight from one of its leading staffing experts.

Can’t make it? Register, and we’ll send you a link you can use to watch a recording when it’s convenient for you. Each 60-minute episode of Coffee and Content is recorded live. On-demand recordings are made available shortly after the live show ends.

Coffee and Content is brought to you by The Content Wrangler and is sponsored by Heretto, a powerful component content management system (CCMS) platform to deploy help and API documentation in a single portal designed to delight your customers.

[Early Bird Conference Discount] ConVEx 2026 Pittsburgh

The Center for Information Development Management (CIDM) brings its annual content development conference, ConVEx, to Pittsburgh, PA, April 13-15, 2026. You can save $300 if you register before the end of the year (end of day December 31, 2025).

In its 28th year, this event offers a wealth of ideas and information to support your efforts in defining and executing a comprehensive content strategy. ConVEx offers a great opportunity to learn, connect, and share ideas with others in the technical documentation and content strategy community and gives you a rare insider’s look at what is working (or not) for other teams.

Why 95% of GenAI Pilots Fail (and What Tech Writers Can Do About It)

According to a recent MIT report, 95% of corporate generative-AI pilots are failing.

If that number surprises you, congratulations on being new to enterprise life. For everyone else — especially technical writers — it confirms what we suspected the moment someone said, “Let’s try AI! How hard could it be?”

The MIT report points to poorly prepared content, vague business goals, and content governance practices so thin you could blow on them and watch them evaporate. And underneath it all sits a truth tech writers have muttered for years: most companies are shoveling unstructured, inconsistent, poorly labeled content into AI systems and hoping for miracles.

Below is what the research found — and what technical writers can do before someone declares another AI pilot a “learning experience.”

AI Fails When Content Has the Structural Integrity of a Half-Melted Snowman

According to the MIT findings, companies toss PDFs, wikis, slide decks, and assorted mystery documents into AI tools and expect reliable answers. That’s like expecting a Michelin-star meal when the only ingredients you brought home from the grocery store are two bruised bananas and a packet of oyster crackers.

AI needs:

Structure

Clarity

Metadata

Version control

Consistent terminology

Without those, LLMs do what humans do in the same situation—guess. Badly.
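To make that list concrete, here is a minimal, hypothetical model for a single procedural topic in Python. The field names (`product`, `versions`, `revision`, and so on) are illustrative assumptions, not any particular CCMS schema. The point is the `to_chunk` method. It renders the topic with its scope spelled out, so a retrieval system receives the product, versions, and prerequisites along with the steps instead of having to guess them.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class TaskTopic:
    """A hypothetical minimal model for one procedural topic."""
    topic_id: str
    title: str
    product: str
    versions: List[str]            # product versions this procedure applies to
    topic_type: str = "task"       # e.g. "concept", "task", or "reference"
    prerequisites: List[str] = field(default_factory=list)
    steps: List[str] = field(default_factory=list)
    revision: int = 1              # bumped on every approved change

    def to_chunk(self) -> str:
        """Render the topic as a retrieval chunk that states its own scope."""
        lines = [
            f"[{self.topic_type}] {self.title}",
            f"Product: {self.product} | Versions: {', '.join(self.versions)} | "
            f"Topic: {self.topic_id} rev {self.revision}",
        ]
        if self.prerequisites:
            lines.append("Prerequisites: " + "; ".join(self.prerequisites))
        lines += [f"{i}. {step}" for i, step in enumerate(self.steps, start=1)]
        return "\n".join(lines)

topic = TaskTopic(
    topic_id="reset-password",
    title="Reset a user password",
    product="Admin Console",
    versions=["4.1", "4.2"],
    prerequisites=["Administrator role"],
    steps=["Open Users.", "Select the user.", "Select Reset password."],
)
print(topic.to_chunk())
```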

Technical writers have been preaching this for decades. We knew “throw content at it and hope for the best” was not a scalable strategy long before generative AI became the world’s favorite shiny object.

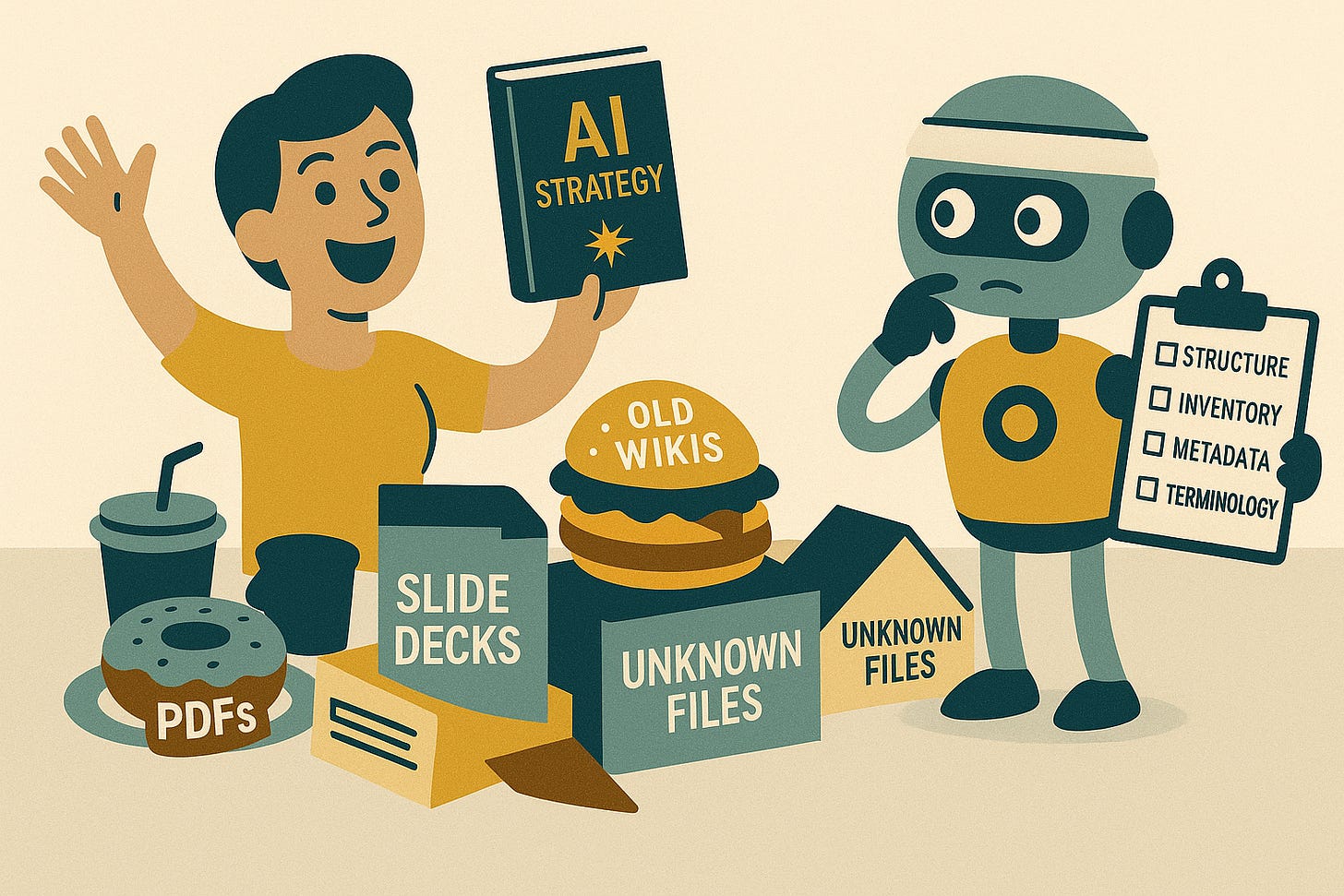

Companies Launch AI Pilots the Way People Start Diets: With Enthusiasm and No Plan

The research highlights another classic pattern: teams rush into AI because someone heard about “transformational value” during a keynote, but nobody paused to ask basic questions like:

What problem are we solving?

Is our source content consistent?

Do we even know where all our content lives?

Should we fix any of this before building the future?

By skipping content operations, organizations create pilots destined to fail — pilots that cost money, time, and at least three internal presentations where someone says “synergy” without irony.

Technical writers already build content inventories, style guides, taxonomies, and structured authoring environments. If companies involved writers earlier, they’d spend less time reporting pilot failures and more time scaling successes.

Most Pilots Don’t Fail (They Never Had a Chance to Succeed)

The MIT report shows that many AI pilots were not designed with success criteria, governance, or production-grade content. They were designed with hope. And hope, while emotionally satisfying, is not an operational strategy.

When results disappoint, leaders blame “AI limitations” rather than acknowledging the more awkward truth: the system relied on content that looked like it had been assembled by a committee whose members don’t speak to each other.

Technical writers can help fix that by:

Designing modular, machine-friendly content

Governing terminology

Adding metadata and structure

Creating models that support retrieval and reasoning

Partnering with AI, product, and engineering teams early

In short, writers provide the discipline AI needs but cannot request politely.

AI Will Only Work When Documentation Stops Being Treated Like Emotional Labor

The MIT research frames generative AI as a strategic asset that requires operational maturity. That maturity lives in documentation teams, teams that often get involved only after pilots collapse, like firefighters arriving to discover the house was built from papier-mâché.

When AI becomes part of the product (fueling chatbots, search experiences, in-app guidance, and autonomous agents), technical writers move from “nice to have” to “everything collapses without you.”

This shift requires:

Content designed for both humans and machines

Clear models, terminology, and metadata

Rigorous governance

Collaboration across disciplines

AI won’t replace writers. But AI will absolutely expose who has been ignoring writers.

What Tech Writers Can Do Now (Beyond Mildly Smirking at the 95% Statistic)

Here are six steps writers can take—practical, empowering, and yes, slightly satisfying:

Build an authoritative inventory

Identify what’s trustworthy, what’s outdated, and what should be escorted off the premises.

Advocate for structured authoring

AI thrives on clean, modular, governed content. Chaos is not a data strategy.

Establish terminology

LLMs cannot magically intuit what your team calls things. Sometimes your team cannot intuit that either.

Create agent-friendly content models

Structure and metadata turn content from “text” into “knowledge.”

Partner early

If you’re invited late, ask for a time machine. Otherwise, insist on being embedded from the start.

Document limits

Telling AI what not to answer is just as important as telling it what to answer.
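Two of these steps, establishing terminology and documenting limits, translate directly into rules a machine can check. Here is a deliberately crude Python sketch. The term map and the out-of-scope subjects are hypothetical examples, and the scope check is a simple keyword match that a production system would replace with something sturdier.

```python
import re

# Hypothetical term base: deprecated or variant term -> approved term.
TERM_MAP = {
    "log-in": "sign in",
    "login to": "sign in to",
    "whitelist": "allowlist",
}

# Hypothetical subjects the assistant should decline or escalate.
OUT_OF_SCOPE = ("pricing", "legal advice", "roadmap")

def check_terminology(text: str):
    """Return (found, preferred) pairs for every deprecated term in the text."""
    findings = []
    for term, preferred in TERM_MAP.items():
        pattern = r"\b" + re.escape(term) + r"\b"
        for match in re.finditer(pattern, text, flags=re.IGNORECASE):
            findings.append((match.group(0), preferred))
    return findings

def is_in_scope(question: str) -> bool:
    """Crude keyword gate: False if the question mentions an out-of-scope subject."""
    q = question.lower()
    return not any(subject in q for subject in OUT_OF_SCOPE)

for found, preferred in check_terminology("Log-in to the portal and update the whitelist."):
    print(f"Replace '{found}' with '{preferred}'")
print(is_in_scope("What is the pricing for the Enterprise plan?"))
```

Even a crude check like this turns a terminology list into something you can run against every topic, and every AI answer, before it ships.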

AI Isn’t the Problem—Your Content Operations Are

The headline statistic—95% failure—makes it sound like AI is misbehaving.

But the truth is simple: AI cannot succeed if the content behind it isn’t designed to support it.

Companies that invest in content operations, structured authoring, terminology governance, and documentation content strategy will see AI deliver real value.

Companies that don’t will keep running pilots whose primary output is disappointment.

Technical writers are the missing ingredient—not the afterthought. And unlike most AI pilots, that’s a story with a strong chance of success. 🤠

Free Course: Become an Explanation Specialist

We explain things constantly — at work, with friends, and in our closest relationships. Every one of us is an explainer.

But most of the time, our explanations just… happen. We fall back on habits we’ve carried for years. Some people naturally communicate with clarity. Others learned pieces of the skill in school or through experience. Many struggle. And everyone — every single one of us — can get better.

That’s where the Explainer Academy comes in.

This free course from our friends at CommonCraft invites you to take a fresh look at how you communicate. You’ll see that explanation isn’t a fixed trait. It’s a skill you can sharpen. And because we explain things so often, even a small improvement can make a big difference. 🤠

Content Operations in the Age of Artificial Intelligence

80% of organizations are using AI, but only 29% report moderate or fast progress in scaling it.

That’s one of many insights from Content Science’s latest report about the world’s largest study of content operations. In this recorded webinar, Colleen Jones and Christopher Jones, PhD, delve into what the newest research with more than 100 professionals, leaders, and executives says about the current state of content operations—and what it means for you.

Watch the on-demand recording to:

• Learn about the promising shift to more mature content operations

• Understand the new obstacles to content operations maturity today

• Gain insight into what the most successful organizations do differently

• Learn why maturing content operations is the secret to success with AI

• Gain perspective from leaders with top organizations such as ServiceNow, Akoya, Red Hat, and more

Why Traceability Matters in the Generative AI Age

As organizations adopt generative AI to help create, retrieve, or transform technical content, the need for traceability has become impossible to ignore. Writers, editors, and content strategists now live in a world where AI can draft a procedure, summarize a release note, or answer a customer’s question using your documentation. That convenience comes with a new responsibility: understanding how the system arrived at its answer.

👉🏼 This is where traceability comes in.

What Traceability Means in Generative AI

Traceability is the ability to follow the path from an AI-generated output back to the ingredients that produced it.

This includes:

Which source documents the system used

Which model or model version generated the response

What prompts or instructions shaped the output

Which agents or intermediate steps contributed

How the content was transformed along the way

👉🏼 Think of it as an audit trail for generative AI.

When someone asks, “Why did the system say this?”, traceability lets you respond with specifics instead of guesses.
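In practice, that audit trail can be as simple as one structured record per answer. The Python sketch below captures the five ingredients listed above. The schema, field names, and example values (including the model name) are hypothetical, not the format of any particular AI platform.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List

@dataclass
class SourceRef:
    topic_id: str
    version: str

@dataclass
class TraceRecord:
    """One audit-trail entry per AI-generated answer (hypothetical schema)."""
    answer_id: str
    model: str                    # model name and version that generated the answer
    prompt_template: str          # ID of the prompt or instruction set used
    sources: List[SourceRef] = field(default_factory=list)
    steps: List[str] = field(default_factory=list)   # agents or transforms, in order

    def to_json(self) -> str:
        # asdict() also converts the nested SourceRef objects into dicts.
        return json.dumps(asdict(self), indent=2)

record = TraceRecord(
    answer_id="ans-0042",
    model="example-model-2025-01",
    prompt_template="support-answer-v3",
    sources=[SourceRef("reset-password", "4.2-r7")],
    steps=["retrieve", "rerank", "generate", "citation-check"],
)
print(record.to_json())
```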

Why Traceability Should Matter to Technical Writers

Technical writers are already responsible for clarity, accuracy, and consistency. Generative AI doesn’t change that — it amplifies it. When AI enters the workflow, writers must ensure the system uses the right source content and produces outputs that reflect the approved truth.

👉🏼 Traceability makes that possible.

With traceability, you can confirm whether an answer came from a vetted topic or from an outdated draft lurking in a forgotten folder. You can determine whether the system hallucinated a detail or faithfully retrieved information from your component content management system (CCMS). You can see which metadata tags influenced retrieval and whether an agent followed your instructions or drifted off course.

Without traceability, you’re left guessing — not a great position when accuracy is part of your job description.

The Role of Traceability in Governance and Compliance

Many writers work in environments where rules matter: medical devices, finance, transit, telecom, cybersecurity, manufacturing. In these contexts, every published statement must be backed by a controlled source.

Related: The Ultimate Guide to Becoming a Medical Device Technical Writer

As AI becomes part of content production, reviewers, auditors, and regulators will ask legitimate questions:

Was this answer based on approved documentation?

Did the system use any restricted content?

Can you prove the output followed the organization’s processes?

👉🏼 Traceability provides the evidence.

It becomes a natural extension of version control, metadata, review workflows, and other content governance practices writers already use.
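Once answers carry a record of their sources, those questions become checks you can automate. This Python sketch assumes a hypothetical registry that exports, for each topic, its current approved version and whether it is restricted. The topic IDs and version strings are invented for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

@dataclass
class SourceStatus:
    """What the content system knows about one topic (hypothetical fields)."""
    current_version: str
    approved: bool
    restricted: bool

def audit_sources(used: List[Tuple[str, str]],
                  registry: Dict[str, SourceStatus]) -> List[str]:
    """Check each (topic_id, version) an answer drew on against the registry.
    Returns human-readable problems; an empty list means every source passed."""
    problems = []
    for topic_id, version in used:
        status = registry.get(topic_id)
        if status is None:
            problems.append(f"{topic_id}: not a controlled source")
            continue
        if not status.approved:
            problems.append(f"{topic_id}: never passed review")
        if status.restricted:
            problems.append(f"{topic_id}: restricted content")
        if version != status.current_version:
            problems.append(f"{topic_id}: used {version}, current is {status.current_version}")
    return problems

registry = {
    "reset-password": SourceStatus("4.2-r7", approved=True, restricted=False),
    "internal-escalation": SourceStatus("1.0-r2", approved=True, restricted=True),
}
used = [("reset-password", "4.1-r3"), ("internal-escalation", "1.0-r2"),
        ("draft-notes", "0.1")]
for problem in audit_sources(used, registry):
    print(problem)
```

An empty result only proves the sources were clean; a reviewer still has to confirm the answer says what those sources say.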

Traceability Helps You Debug AI Systems

When a retrieval-augmented generation (RAG) system or an AI agent produces something incorrect, traceability tells you where the failure occurred.

Maybe the retrieval logic surfaced the wrong topic

Maybe the metadata on a source file was inaccurate

Maybe an AI agent misinterpreted the instruction chain

👉🏼 Traceability turns debugging into a structured process instead of a guessing game.

This matters for writers because you’re often the first person asked to evaluate whether an AI-generated answer is correct — and the first person blamed if it isn’t.

With traceability, you can pinpoint the issue, fix the source, and improve the system.
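Here is what that looks like in miniature. The Python sketch below stands in for a real retriever with simple word overlap. That is an assumption made for brevity, since production RAG systems usually rely on embeddings or hybrid search. It does log every candidate's score, version, and status. In this invented example, an archived topic ties with the current one, which is exactly the kind of problem a trace exposes and a fluent answer hides.

```python
import re
from typing import Dict

def tokenize(text: str) -> set:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve_with_trace(query: str, corpus: Dict[str, dict], k: int = 2):
    """Rank topics by word overlap with the query (a stand-in for a real
    retriever) and log every candidate's score and metadata."""
    q = tokenize(query)
    trace = []
    for topic_id, topic in corpus.items():
        score = len(q & tokenize(topic["text"]))
        trace.append({"topic": topic_id, "score": score,
                      "version": topic["version"], "status": topic["status"]})
    trace.sort(key=lambda row: row["score"], reverse=True)  # stable for ties
    hits = [row["topic"] for row in trace[:k] if row["score"] > 0]
    return hits, trace

# Invented corpus: an archived topic was never removed from the index.
corpus = {
    "export-v2": {"text": "Export reports to CSV from the Reports page.",
                  "version": "2.0", "status": "published"},
    "export-v1": {"text": "Export reports to CSV from the Tools menu.",
                  "version": "1.0", "status": "archived"},
    "billing": {"text": "Update billing details in Account settings.",
                "version": "2.0", "status": "published"},
}
hits, trace = retrieve_with_trace("How do I export reports to CSV?", corpus)
for row in trace:
    print(row)
```

In a case like this the fix lives in the content, not the model: remove archived topics from the index, or give the retriever status metadata it can filter on.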

Protecting the Integrity of Your Documentation

Writers spend years building controlled vocabularies, maintaining topic libraries, preserving intent, and making sure content stays consistent. Generative AI systems, by design, remix information. That remixing can introduce subtle distortions unless you can see which sources were used and how the system transformed them.

Traceability helps you catch:

Pulls from outdated drafts

Blends of two similar topics

Responses formed from misinterpreted metadata

Answers based on content that never passed review

👉🏼 It gives writers back the visibility they lose when AI systems automate pieces of the workflow.

Preparing for AI-Driven Content Operations

As CCMS platforms and documentation tools integrate AI, traceability will become part of the content lifecycle.

Future authoring and publishing workflows will include:

Metadata showing which topics influenced an AI-generated suggestion

Logs showing which versions of a topic were used

Audit trails showing how an AI assistant arrived at its edits or summaries

Review tools that highlight AI-originated content for approval

👉🏼 Writers who understand traceability will be positioned to help design these workflows, maintain the content behind them, and ensure trustworthy outputs.

What This Means for Technical Writers

Traceability is the safety layer that makes generative AI viable in professional documentation. It gives technical writers the ability to confirm accuracy, protect content integrity, debug AI behavior, and meet governance expectations.

Without it, AI becomes a black box — impressive, fast, and completely untrustworthy.

With it, AI becomes a powerful extension of your content operations. Writers remain in control. Documentation stays aligned with the truth. Teams can scale with confidence. 🤠

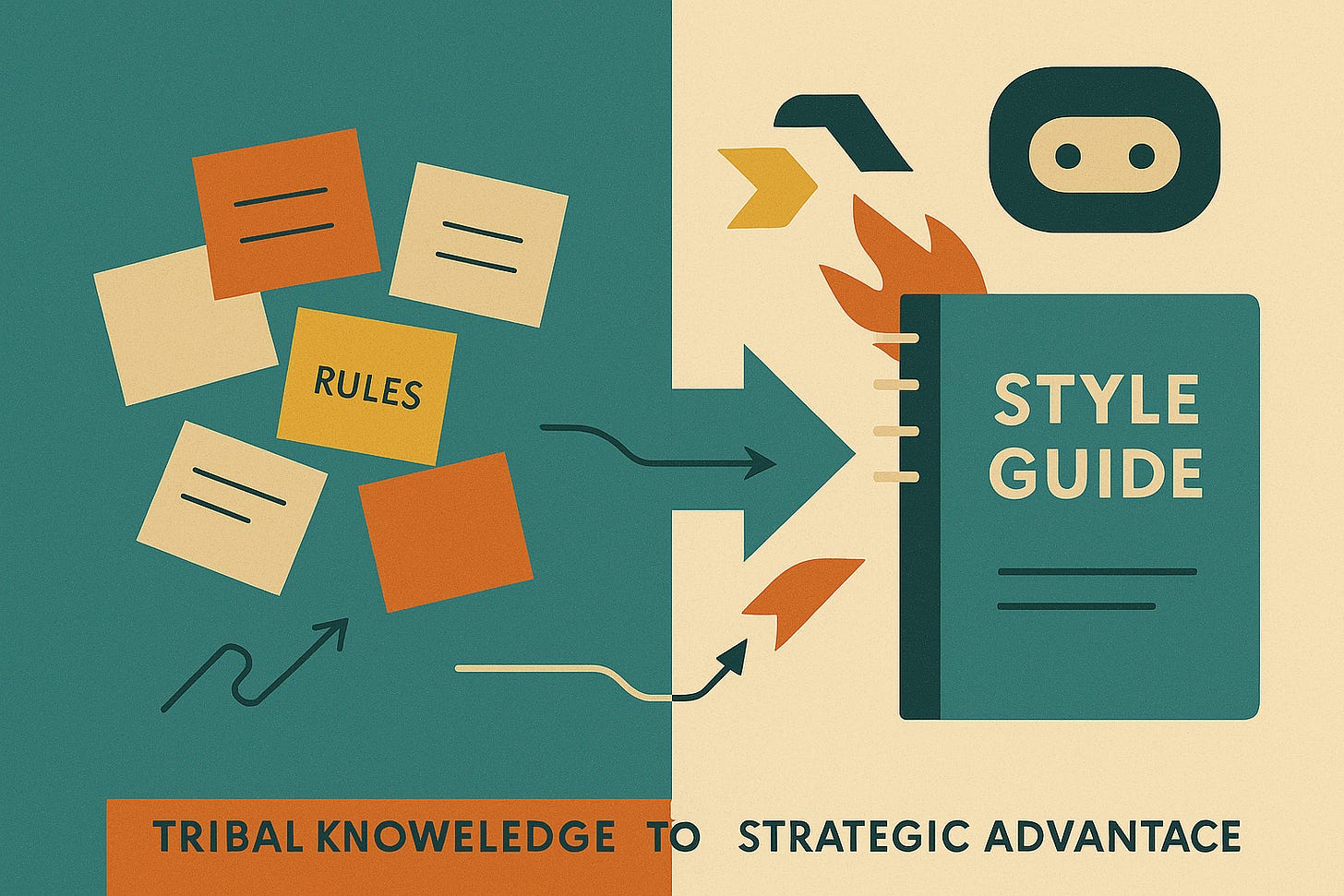

How to Retroactively Build a Technical Documentation Style Guide Using AI — And Use It to Spot Content Gaps

Technical writers know the pain of “tribal knowledge” all too well. Small teams often share unwritten rules about tone, formatting, terminology, and compliance expectations. Over time, those unwritten norms become invisible — but new writers, contractors, and especially AI tools need those rules documented.

The good news: modern AI systems can help you extract a style guide directly from your existing content and use it to identify what’s missing in your documentation.

In this short clip from the webinar “Leveling Up: From AI Table Stakes to AI Automation,” host Melanie Denise Davis (“The AI Wrangler”) talks with AI expert and trainer Bill Raymond about how teams can retroactively build a usable style guide by leveraging AI. Raymond explains that modern AI tools (such as Microsoft Copilot, ChatGPT, Claude, and Gemini) can connect directly to a team’s existing documentation, analyze patterns, and generate an initial style guide from published content.

He emphasizes that this draft is only a starting point: writers can iterate by correcting tone, removing unintended patterns (like sarcasm), and adding organization-specific rules such as compliance requirements and approved citation sources. Once refined, the guide can be downloaded and used as a formal reference.

Raymond also notes that once AI understands the team’s content and style, it can help identify gaps, compare coverage against competitors, and generate practical roadmaps (such as what to produce in the next two weeks or two months), making AI a powerful partner in turning tribal knowledge into a strategic content advantage.

Here’s a short review of some of the advice he shared during the show.

Connect AI to Your Existing Docs

Today’s leading AI tools — such as Microsoft Copilot, ChatGPT, Claude, and Gemini — can all connect to your documentation repositories. Once connected, the model can read your published content and begin to infer the patterns your team follows.

If you’ve never created a formal style guide, you can give the AI a simple instruction:

“Review these documents and create a style guide based on them.”

The model will scan your content and produce an initial draft. It won’t be perfect, but that’s the point — you now have something concrete to refine.
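Because the AI's first draft won't be perfect, it helps to have a few numbers of your own to check it against. The Python sketch below is not how Copilot, ChatGPT, Claude, or Gemini analyze content. It is a deterministic, hypothetical complement that measures two conventions: heading capitalization, and how often the text addresses the reader as “you.” Its heuristics are simplifying assumptions. For example, proper nouns in a heading can make sentence case look like title case.

```python
import re
from collections import Counter
from typing import List

# Words left lowercase in title case; a simplifying assumption, not a standard list.
MINOR_WORDS = {"a", "an", "and", "as", "at", "for", "in", "of", "on", "or", "the", "to", "with"}

def heading_style(heading: str) -> str:
    """Classify a heading as 'title' or 'sentence' case from its non-initial words."""
    words = re.findall(r"[A-Za-z]+", heading)[1:]  # first word is capitalized either way
    words = [w for w in words if w.lower() not in MINOR_WORDS]
    if not words:
        return "unknown"
    capitalized = sum(w[0].isupper() for w in words)
    return "title" if capitalized > len(words) / 2 else "sentence"

def infer_conventions(headings: List[str], body: str) -> dict:
    """Summarize the dominant heading case and the share of words that are 'you' or 'your'."""
    styles = Counter(heading_style(h) for h in headings)
    heading_case = styles.most_common(1)[0][0] if styles else "unknown"
    words = re.findall(r"[a-z']+", body.lower())
    you_rate = sum(w in ("you", "your") for w in words) / max(len(words), 1)
    return {"heading_case": heading_case, "second_person_rate": round(you_rate, 3)}

# Invented sample content.
headings = ["Install the agent", "Configure proxy settings", "Upgrade From Version 3"]
body = "You can install the agent on your server."
print(infer_conventions(headings, body))
```

If the AI's draft says your headings use title case and this count says otherwise, that's a rule worth questioning before it lands in the official guide.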

Iterate and Correct the AI’s Draft

AI-generated style guides sometimes surface unexpected rules. If the model finds a sarcastic sentence or two in your archives, it may conclude that sarcasm is part of your brand voice.

With a chatbot, you can interrogate and correct it:

“We don’t use sarcasm. Remove that rule.”

“We are a compliance-oriented organization. Add requirements for legal citations from our approved sources.”

This iterative process lets you shape the AI’s output into an accurate reflection of your team’s expectations. When you’re satisfied, you can export the document as your official corporate style guide.

Use the Style Guide and AI Together to Identify Gaps

Once the AI understands your style, your content, and your rules, you can take the next step: ask it what’s missing.

Because the model now has access to what you’ve published, it can help answer questions like:

“What topics are competitors covering that we are not?”

“What gaps exist in our current documentation set?”

With those insights, you can begin structured brainstorming. For example:

“Given a two-month timeline, what can I realistically create?”

“What could I produce in the next two weeks?”

“What should be completed by the end of two months?”

The model can then generate a prioritized plan based on your resources, whether you’re a team of one or many.
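If you want to sanity-check the plan the model proposes, the underlying reasoning is simple enough to sketch. In this hypothetical Python example, a gap is any topic that appears in a reference set but is missing from yours. The reference set could be a competitor topic list you compiled by hand. The plan then fills two-week sprints smallest-first. The topic names, effort estimates, ten-working-day sprint, and four-sprint (roughly two-month) horizon are all assumptions.

```python
from typing import Dict, List, Set

def find_gaps(ours: Set[str], reference: Set[str]) -> List[str]:
    """Topics in the reference set that we don't cover, sorted for stable output."""
    return sorted(reference - ours)

def plan_sprints(gaps: List[str], effort_days: Dict[str, int],
                 days_per_sprint: int = 10, sprints: int = 4) -> List[List[str]]:
    """Greedily place the smallest gaps first into two-week sprints.
    Anything that doesn't fit in any sprint is left out of the schedule."""
    schedule: List[List[str]] = [[] for _ in range(sprints)]
    remaining = [days_per_sprint] * sprints
    for topic in sorted(gaps, key=lambda t: effort_days[t]):
        for i in range(sprints):
            if effort_days[topic] <= remaining[i]:
                schedule[i].append(topic)
                remaining[i] -= effort_days[topic]
                break
    return schedule

# Invented inventory and effort estimates (working days per topic).
ours = {"install", "upgrade", "backup"}
reference = {"install", "upgrade", "backup", "sso", "audit-logs", "api-quickstart", "migration"}
effort = {"sso": 6, "audit-logs": 3, "api-quickstart": 4, "migration": 12}

gaps = find_gaps(ours, reference)
schedule = plan_sprints(gaps, effort)
scheduled = {t for sprint in schedule for t in sprint}
unscheduled = [t for t in gaps if t not in scheduled]
for i, sprint in enumerate(schedule):
    print(f"Weeks {2 * i + 1}-{2 * i + 2}: {sprint}")
print("Unscheduled:", unscheduled)
```

Greedy smallest-first scheduling is only one heuristic. It favors quick wins, and anything larger than a sprint (like `migration` here) has to be split before it can be scheduled at all.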

From Tribal Knowledge to Strategic Advantage

AI can help you transform undocumented norms into a structured, maintainable style guide. And once that guide exists, the same AI can surface content gaps, suggest improvements, and help you plan your next steps.

By letting your published work teach the AI what “good” looks like — and then refining it — you turn your accumulated content into a source of clarity, consistency, and strategic insight for your technical writing practice. 🤠